Human infants acquire a wide range of cognitive abilities in the first few years of life. Although behavioral changes in infants have been closely investigated, the mechanisms underlying this development are not yet fully understood. We proposed a theory of cognitive development based on predictive learning of sensorimotor information. Our robot experiments demonstrated that cognitive functions such as self-other cognition, imitation, goal-directed action, and prosocial behavior can all be acquired through sensorimotor predictive learning, whereas traditional studies have modeled them using different architectures (Nagai & Asada, 2015).

Emergence of Mirror Neuron System

The mirror neuron system (MNS) is a group of neurons that discharge both when people execute an action and when they observe the same action performed by other individuals. This “mirroring” function enables people to better understand and anticipate the goals of others’ actions and to imitate them. We developed a neural network model by which a robot acquires the MNS through sensorimotor predictive learning. The model learns the association between motor neurons and visual representations while its visual acuity gradually improves, as it does in infants. Our experiment demonstrated that the robot learned to distinguish self from other based on their predictability and that the MNS emerged as a byproduct of the development of self-other cognition (Nagai et al., 2011; Kawai et al., 2012).
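
The role of predictability and visual acuity can be illustrated with a toy sketch. It is not the neural network model from the cited papers: the quantization in `blur`, the identity forward model, the threshold, and all data values are assumptions made only for illustration.

```python
def blur(value, acuity):
    """Quantize a visual value. Lower acuity gives coarser bins
    (a hypothetical stand-in for immature vision)."""
    step = 1.0 / acuity
    return round(value / step) * step


def mean_prediction_error(motor, visual, acuity):
    """Average mismatch between the motion predicted from motor commands
    and the motion actually seen, both viewed at the given acuity.
    Assumed forward model: predicted visual motion equals the motor command."""
    errors = [abs(blur(v, acuity) - blur(m, acuity)) for m, v in zip(motor, visual)]
    return sum(errors) / len(errors)


def classify(motor, visual, acuity, threshold=0.05):
    """Label a visual stream as 'self' when it is well predicted by the
    robot's own motor commands, and as 'other' when it is not."""
    error = mean_prediction_error(motor, visual, acuity)
    return "self" if error <= threshold else "other"


# Hypothetical data: the robot's own motion follows its motor commands exactly,
# while another agent performs a similar but not identical motion.
motor = [0.1, 0.2, 0.8, 0.9]
own_view = list(motor)
other_view = [0.3, 0.4, 0.6, 0.7]

for acuity in (1, 10):
    print(acuity, classify(motor, own_view, acuity), classify(motor, other_view, acuity))
```

With these assumed values, both streams look equally predictable at the coarse acuity, so the other's motion is grouped with the robot's own motor commands. At the finer acuity, only the robot's own motion remains predictable and the two streams separate.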

Emergence of Prosocial Behavior

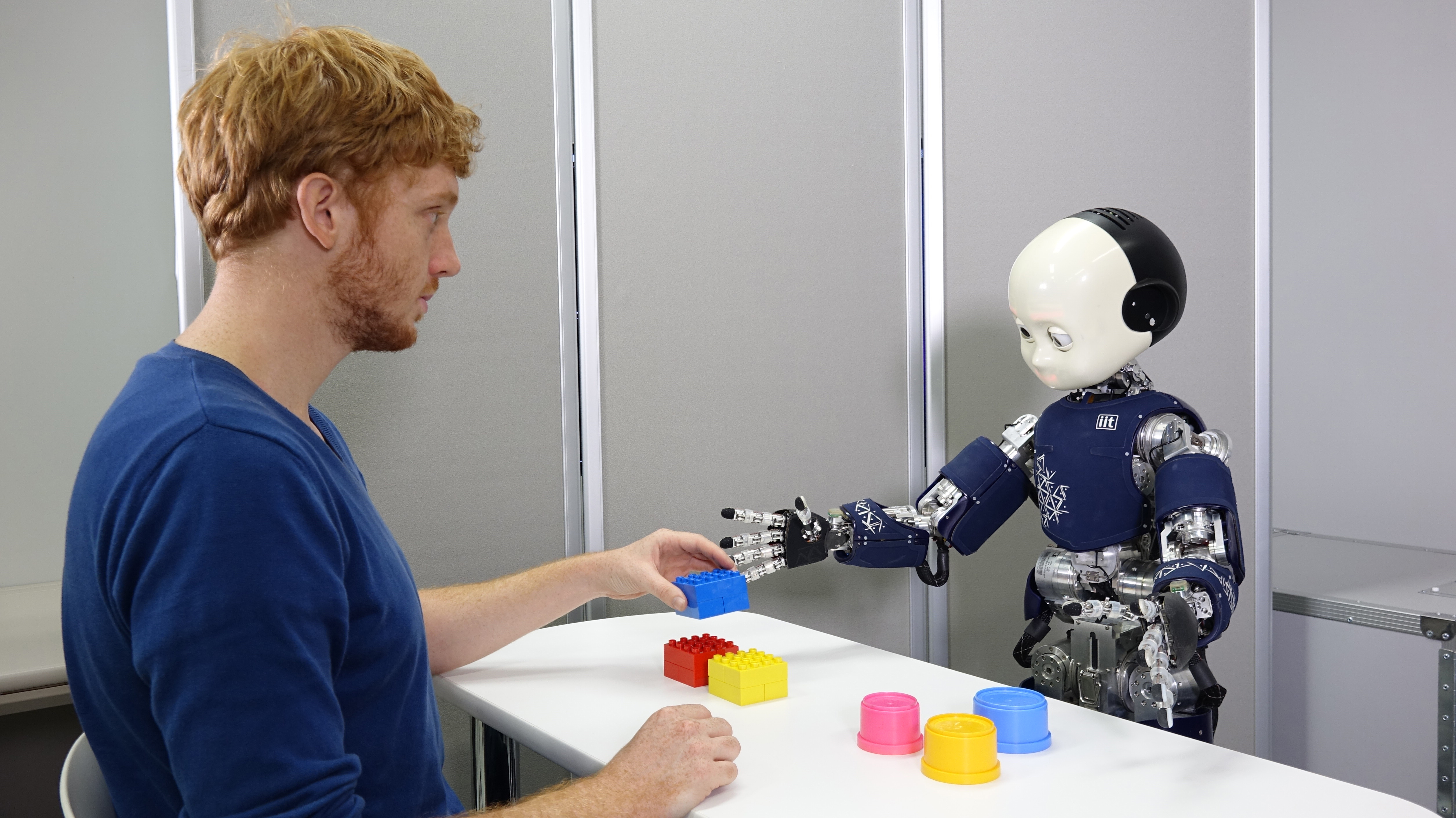

Although it is well known that infants tend to help other people even without expecting a reward, what motivates them to behave altruistically is an open question. We hypothesized that the minimization of prediction error is a potential mechanism behind infants' prosocial behavior. Under this hypothesis, infants first learn to generate actions through sensorimotor predictive learning and then use the learned predictor to recognize how the sensory state changes over time. If another person fails to achieve an action goal, infants detect a prediction error, which triggers their own action to minimize that error. Our experiment demonstrated that a model based on the minimization of prediction error enabled a robot to produce prosocial behaviors when the actions it detected were part of its own action experience (Baraglia et al., 2014).
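
The hypothesized loop (learn a predictor from one's own actions, observe another agent, act when prediction error appears) can be sketched as follows. This is a minimal illustration under stated assumptions, not the model of Baraglia et al.: the scalar state (a distance between hand and goal), the linear one-step predictor, the threshold, and the function names are all hypothetical.

```python
def learn_predictor(own_trajectory):
    """Least-squares fit of a one-step predictor s[t+1] = a * s[t],
    using only the robot's own action experience."""
    pairs = list(zip(own_trajectory, own_trajectory[1:]))
    return sum(s0 * s1 for s0, s1 in pairs) / sum(s0 * s0 for s0, _ in pairs)


def helping_target(a, observed, threshold=0.1):
    """Compare the latest observed state with the predicted one.
    Return the predicted state as the target of a helping action when
    the prediction error exceeds the threshold, otherwise None."""
    predicted = a * observed[-2]
    error = abs(observed[-1] - predicted)
    return predicted if error > threshold else None


# Hypothetical own experience: the distance to the goal halves at every step.
own_experience = [1.0, 0.5, 0.25, 0.125]
a = learn_predictor(own_experience)

# Another agent who progresses as predicted, and one who stalls short of the goal.
success = [1.0, 0.5, 0.25]
failure = [1.0, 0.5, 0.5]

print(helping_target(a, success))  # no prediction error, so no action is triggered
print(helping_target(a, failure))  # prediction error, so the predicted state becomes the target
```

In this sketch the helping action is simply the state the predictor expected; reaching it removes the prediction error. A predictor that was never trained on a given action would have no meaningful expectation for it, which is one way to read the dependence on the robot's own action experience.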

Development of Emotion

Behavioral studies of infants have shown that an initial pleasant/unpleasant state gradually differentiates into emotional states such as happiness, anger, and sadness over the first few years of life. We proposed a probabilistic neural network model that hierarchically integrates multimodal information to reproduce the emotional development observed in infants. Emotional states are represented as the activation of neurons at the highest layer of the network. Our key idea is that tactile interaction between an infant and a caregiver plays an important role in emotional development, because the tactile sense can inherently discriminate pleasant from unpleasant signals and has better acuity than other modalities in early infancy. Our experiment using actual sensory signals from interaction demonstrated that typical emotional categories developed only when the tactile sense was endowed with these two properties (Horii et al., 2013).